If the ongoing demise of newspaper readerships was not enough to persuade prospective journalists to pick an alternative career, there’s more bad news in the offing from America: computer-generated stories.

Google has invested £622,000 in a Reporters and Data and Robots (RADAR) scheme in Britain through the Press Association (PA), which has already started to produce computer-generated news stories.

This involves increasingly sophisticated Artificial Intelligence Natural Language Generation software which processes information and transforms it into news copy by scanning data, selecting an article template from a range of preprogrammed options, then adding specific details such as place names.
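For readers curious about the mechanics, the three steps (scan the data, pick a template, fill in the details) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the template wording, the `select_template` and `generate_story` functions and the record fields are hypothetical and are not taken from RADAR or any real system. The figures are the Wolverhampton ones quoted in this article.

```python
# Hypothetical templates: the wording and keys are invented for illustration.
TEMPLATES = {
    "below_national": (
        "Parents in {area} are less likely to be married before having "
        "children than the average UK couple. Locally, {local_pct} per cent "
        "of babies have parents in a legally recognised relationship, "
        "against {national_pct} per cent nationwide."
    ),
    "above_national": (
        "Parents in {area} are more likely to be married before having "
        "children than the average UK couple. Locally, {local_pct} per cent "
        "of babies have parents in a legally recognised relationship, "
        "against {national_pct} per cent nationwide."
    ),
    "equal": (
        "Parents in {area} are as likely to be married before having "
        "children as the average UK couple, at {local_pct} per cent."
    ),
}


def select_template(local_pct, national_pct):
    """Step 2: choose a template key by comparing local and national figures."""
    if local_pct < national_pct:
        return "below_national"
    if local_pct > national_pct:
        return "above_national"
    return "equal"


def generate_story(record, national_pct):
    """Steps 1 and 3: read the data record, then fill in the chosen template."""
    key = select_template(record["married_pct"], national_pct)
    return TEMPLATES[key].format(
        area=record["area"],
        local_pct=record["married_pct"],
        national_pct=national_pct,
    )


# Figures quoted in this article: 43.5 per cent locally, 52.3 per cent nationally.
record = {"area": "Wolverhampton", "married_pct": 43.5}
story = generate_story(record, 52.3)
print(story)
```

Swapping in another area's record would produce a different story from the same handful of templates, which is roughly how one dataset can be turned into many local articles.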

Try to keep your mind from wandering when reading this example from local Wolverhampton paper the Express and Star, part of PA’s RADAR experiment:

The latest figures reveal that 56.5 per cent of the 3,476 babies born across the area in 2016 have parents who were not married or in a civil partnership when the birth was registered. That’s a slight increase on the previous year.

Marriage or a same-sex civil partnership is the family setting for 43.5 per cent of children.

The figures mean that parents in Wolverhampton are less likely to get married before having children than the average UK couple. Nationwide, 52.3 per cent of babies have parents in a legally recognised relationship.

The figures on births, released by the Office for National Statistics, show that in 2016, 34 per cent of babies were registered by parents who are listed as living together but not married or in a civil partnership.

The US news wire service Associated Press (AP) was one of the first to trial robo-journalism, producing computer-generated sports and finance stories in 2013. The Washington Post used a bot to report on results from the Rio Olympic Games in 2016.

Long on numbers, short on analysis

If it’s a numbers game, robots can source and produce news copy faster than any human. Football scores, medal tallies, company profits – anything where the numbers alone tell the story, the bot will do the job. It will, however, be basic information only.

The Press Association aims to produce 30,000 such automated stories each month. But headline-grabbers they will never be, and for that journalists should be thankful. The topics are dry and statistics-based, reported in a one-note style which is data-heavy, short on analysis and utterly devoid of colour. If it were submitted by one of my journalism students, it’s the type of copy I would mark down for being boring.

The point is, this content could not be described as human interest, the meat and drink of UK tabloids. Such stories, with appropriately gripping headlines, sell papers and drive website traffic.

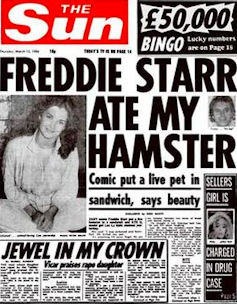

Rest assured, the famous Sun headline of 1986, “Freddie Starr ate my hamster”, was neither accurate nor written by a computer. It did wonders, however, for the paper’s circulation and will go down in history as one of our most famous tabloid headlines. It also needed a human imagination behind it.

Imagine computer-generated coverage of the forthcoming royal wedding. Would we be treated to a blow-by-blow account of the guests’ outfits? Who sat with whom and who wasn’t on the guest list? Of course not: the story would focus on numbers, historical statistics and not much else. And when it comes to big, serious, in-depth stories, bots would not be able to assimilate or reflect the kind of nuance and complexity involved in investigative journalism.

One aspect of the robot stories which does impress is the good standard of English: no aberrant apostrophes, spelling errors or, horror of horrors, poor grammar. In this battle of robot versus human, the robot wins.

Trials and errors

But there is another serious issue here – the inevitability of computer error. Journalists make mistakes, and in the most serious cases of libel and defamation, they are jailed or sued. Automated software may not be designed to produce juicy stories in danger of being defamatory, but that doesn’t mean serious errors will not occur.

In the US, it has already happened. On June 22, 2017, the Los Angeles Times, one of the first media outlets to use automated software to compose some of its copy, reported that a 6.8 magnitude earthquake had hit the Pacific Ocean about ten miles from Santa Barbara.

The information was nearly a century out of date and related to an earthquake which hit Santa Barbara in 1925. The notification had been sent out in error by the US Geological Survey. The vast majority of news outlets recognised the alert as a mistake and ignored it. Not the automated system at the LA Times, which duly processed it, resulting in publication. The paper suffered a major embarrassment and later explained what happened.

Another potential and indeed more serious threat is hacking. Fake news is one of this year’s most widely used phrases. If the LA Times can have a century-old earthquake hit the headlines, just think what the Russians could do.

So is computer-generated news a serious threat to the future of journalism? Certainly it cannot be dismissed. Giants like Google do not invest lightly, so there is clearly a potential market. News wire services like the Press Association, Associated Press and smaller regional providers have long affected the journalism jobs market here and abroad.

It is much more cost-effective for a news organisation to subscribe to a news service than to staff each story it wishes to cover. Larger news operations, from newspapers to TV stations, often use the service as a tip-off or starting point, prompting them to send reporters to get their own interviews and put their own spin on a story. Conversely, small local papers will often lift copy straight from the wires.

Neither approach is likely to change, and unless the British population’s news tastes alter dramatically, computer-generated stories are not destined for the front pages any time soon. Budding investigative journalists should perhaps not lose too much sleep.

Author: Christina McIntyre: Lecturer in Broadcast Journalism, Glasgow Caledonian University

Credit link: https://theconversation.com/robo-journalism-computer-generated-stories-may-be-inevitable-but-its-not-all-bad-news-89473